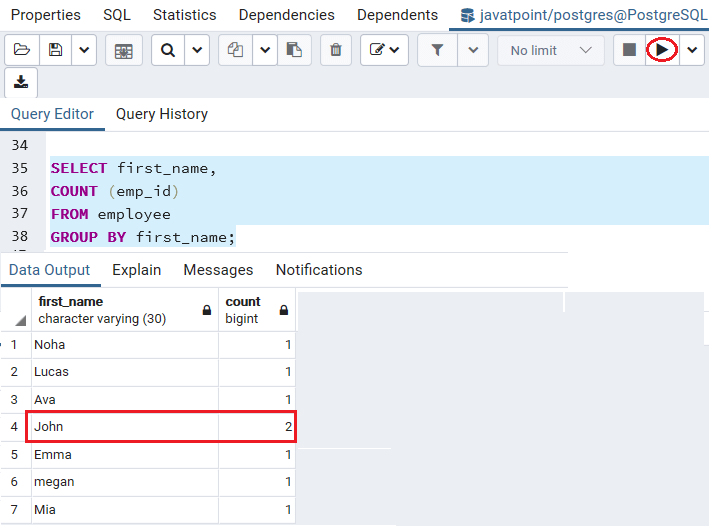

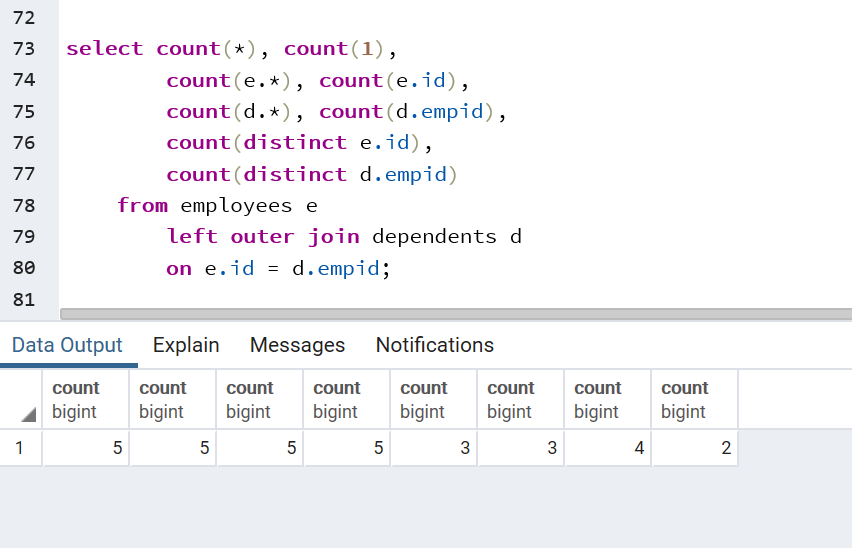

The resulting output is sorted by the schema with the maximum number of rows. The above uses a subquery to first compute the totals row count per table and performs a GROUP BY operation to get the total number of rows in each schema of the current database. WHERE table_schema NOT IN ('pg_catalog', 'information_schema') SELECT table_schema, SUM(row_count) AS total_rows FROM (Ĭount_rows_of_table(table_schema, table_name) AS row_count This can be achieved by using the following query. Next, let us say we want to get the total row count across all tables broken down per schema. table_schema | table_name | count_rows_of_table If we run the above query on our test database, we should see the following output. The query above outputs a table that contains the row counts of all tables across the various schemas, first sorted by the table_schema column and for each table schema, sorted by the tables with the largest number of rows. Table_schema not in ('pg_catalog', 'information_schema') selectĬount_rows_of_table(table_schema, table_name)

We then call the function we defined in the previous section to get the row count for each table. Because we are mainly interested in the user tables, we filter out all tables belonging to pg_catalog and information_schema, which are system schemas.

The information_schema.tables table in the system catalog contains the list of all tables and the schemas they belong to. example=# SELECT count_rows_of_table('northwind', 'orders') You can test the above function by passing in a table (for example, the orders table loaded from the Northwind dataset) as shown below. Select count(*) from "?schema"."?tablename" This function can subsequently be used in various types of queries to print the desired row counts in the various scenarios. Note that this function must be owned by a suitably privileged user, in our example we will use the yugabyte user. We’ll solve this problem by first creating a user defined function (UDF), count_rows_of_table which counts the number of rows in a single table. This means that the high-level approach to solving this problem is identical in the case of both PostgreSQL and YugabyteDB. Recall that YugabyteDB re-uses the native PostgreSQL codebase for its query layer (or the SQL processing layer) of the database.

create table "Some Exotically Named Table"( An instance of a table and a column with an exotic name is shown below. Also, note that the programmatic generation of SQL queries using catalog tables needs to handle exotic names properly. The examples in this blog post, which are essentially dynamic SQL queries on the system catalog tables, must be done with superuser privileges. We will create an example database, import two popular SQL datasets – Northwind and SportsDB, and run through the above scenarios on these example databases. Aggregate row count across all tables in the database.Aggregate row counts per schema of the database.Row counts broken down per table in the schema.

#Postgresql count how to#

This blog post outlines how to get the following row counts of tables in a database: While there are a number of use cases for this, my scenario was to get the per-table row counts of all tables in PostgreSQL and YugabyteDB as a first sanity check after migrating an application with the pre-existing data from PostgreSQL to YugabyteDB. Getting total row counts of data in tables across various dimensions (per-table, per-schema, and in a given database) is a useful technique to have in one’s tool belt of SQL tricks.

0 kommentar(er)

0 kommentar(er)